The race to build the first useful quantum computer continues apace. And, like all races, there are decisions to be made, including the technology each competitor must choose. But, in science, no one knows the race course, where the finish line is, or even if the race has any sort of prize (financial or intellectual) along the way.

On the other hand, the competitors can take a hand in the outcome by choosing the criteria by which success is judged. And, in this rather cynical spirit, we come to IBM's introduction (PDF) of "quantum volume" as a single numerical benchmark for quantum computers. In the world of quantum computing, it seems that everyone is choosing their own benchmark. But, on closer inspection, the idea of quantum volume has merit.

Many researchers benchmark using gate speed (how fast a quantum gate can perform an operation) or gate fidelity, which is how reliable a gate operation is. But these single-dimensional characteristics do not really capture the full performance of a quantum processor. By analogy, it would be like comparing CPUs by clock speed or cache size while ignoring the other bazillion features that impact computational performance.

The uselessness of these individual comparisons was highlighted when researchers compared a slow but high-fidelity quantum computer to a fast but low-fidelity quantum computer and came to the conclusion that the result was pretty much a draw.

It gets even worse when you consider that, unlike classical computers, you need a certain number of qubits to even carry out a calculation of a certain computational size. So, maybe, IBM researchers thought, a benchmark needs to somehow encompass the idea of what a quantum computer is capable of calculating, but not necessarily how fast it will perform a calculation.

How deep is your quantum?

The IBM staff are building on a concept called circuit depth. Circuit depth starts with the idea that, because quantum gates can always introduce an error, there is a maximum number of operations that can be performed before it is unreasonable to expect the qubit state to be correct. Circuit depth is that number, multiplied by the number of qubits. If used honestly, this provides a reasonable idea of what a quantum computer can do.

The problem with depth is that you can keep the total number of qubits constant (and small) while reducing the error rate to very close to zero. That gives you a huge depth, but only computations that fit within the number of qubits can be calculated. A two-qubit quantum computer with enormous depth is still useless.

The goal, then, is to express computational capability, which must include the number of qubits and the circuit depth. Given an algorithm and problem size, there is a minimum number of qubits required to perform the computation. And, depending on how the qubits are connected to each other, a certain number of operations have to be performed to carry out the algorithm. The researchers express this by comparing the maximum number of qubits involved in a computation to the circuit depth and taking the square of the smaller number. So, the maximum possible quantum volume is just the number of qubits squared.
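As a minimal sketch of the rule just described (the square of the smaller of the qubit count and the circuit depth), the arithmetic looks like this. The function and parameter names are illustrative, not IBM's, and the depth is simply taken as a given number here.

```python
def quantum_volume(num_qubits: int, achievable_depth: float) -> float:
    """Square of the smaller of the qubit count and the achievable circuit depth.

    Both names are hypothetical; the depth is supplied by the caller rather
    than derived from any hardware model.
    """
    if num_qubits < 0 or achievable_depth < 0:
        raise ValueError("num_qubits and achievable_depth must be non-negative")
    return min(num_qubits, achievable_depth) ** 2


# With plenty of depth, the qubit count is the limit: 30 qubits give 30 squared.
print(quantum_volume(30, 1000))

# With shallow depth, the depth is the limit, however many qubits there are.
print(quantum_volume(30, 10))

# The two-qubit machine with enormous depth is still stuck at a tiny volume.
print(quantum_volume(2, 10**9))
```

Taking the minimum is what stops either quantity from being gamed on its own: extra depth cannot make up for missing qubits, and extra qubits cannot make up for a shallow circuit.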

To give you an idea, a 30-qubit system with no gate errors has a quantum volume of 900 (no units for this). To achieve the same quantum volume with imperfect gates, the error rate has to be below 0.1 percent. But, once this is achieved, all computations that require 30 or fewer qubits can be performed on that quantum computer.

That seems simple enough, but figuring out the depth takes a bit of work because it depends on how the qubits are interconnected. So, the benchmark indirectly takes into account architecture.

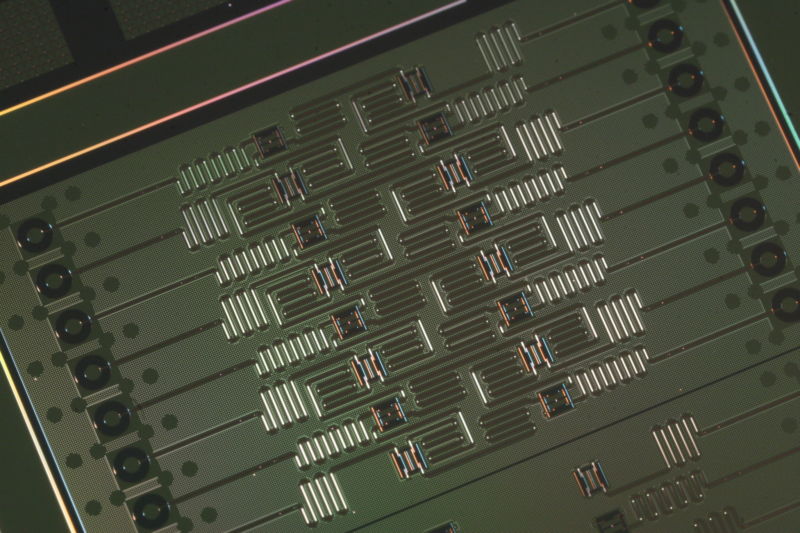

The idea is that the minimum number of operations required to complete an algorithm occurs when every qubit is directly connected to every other qubit. But, in most cases, direct connections like that are not possible, so additional gates or qubits have to be added to connect qubits that are distant from each other. But each gate operation comes with the chance of introducing an error, so the depth changes.

The researchers calculated the error rate that would be required to obtain a certain quantum volume. The idea is that many computations can be broken up into a series of two-qubit computations. Then, for a given qubit arrangement (the connections between qubits), you can figure out how many operations it takes to perform a two-qubit operation between every pair of qubits. From that you can figure out the required depth and the error rate needed to reach it.
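To make the connectivity point concrete, here is a rough sketch that counts how many hops separate each pair of qubits in a given arrangement. It assumes, for illustration only, that every extra hop between two qubits costs extra gate operations; the topologies and helper names are hypothetical and this is not the calculation in IBM's paper.

```python
from collections import deque


def hops_from(adjacency, start):
    """Breadth-first search: number of hops from `start` to each reachable qubit."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        qubit = queue.popleft()
        for neighbor in adjacency[qubit]:
            if neighbor not in dist:
                dist[neighbor] = dist[qubit] + 1
                queue.append(neighbor)
    return dist


def pair_hops(adjacency):
    """Hop count for every unordered pair of connected qubits."""
    result = {}
    for a in adjacency:
        for b, d in hops_from(adjacency, a).items():
            if a < b:
                result[(a, b)] = d
    return result


def fully_connected(n):
    """Every qubit is directly linked to every other qubit."""
    return {q: [p for p in range(n) if p != q] for q in range(n)}


def line(n):
    """Qubits in a row, each linked only to its nearest neighbors."""
    return {q: [p for p in (q - 1, q + 1) if 0 <= p < n] for q in range(n)}


for name, layout in (("fully connected", fully_connected(5)), ("line", line(5))):
    hops = pair_hops(layout)
    worst = max(hops.values())
    mean = sum(hops.values()) / len(hops)
    print(f"{name}: worst-case hops = {worst}, mean hops = {mean}")
```

In the fully connected layout every pair is one hop apart, while in the line the end qubits are four hops apart. Those extra hops are the additional operations that eat into the depth.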

And, actually, the results are not too bad, if you like to make fully interconnected qubit systems. Then you end up with error rates that, depending on the number of qubits, are around 1 per 1,000. But the penalty for reduced interconnections is severe, with circuits like the latest IBM processor requiring error rates at least a factor of ten better than a fully connected quantum computer. That is if you believe the calculation. Unfortunately, if you compare the calculated error rate, the number of qubits, and the quantum volume, the results can look inconsistent. That turned out to be reader error: when you read the scale wrong, you get inconsistent results. Once you correct for it, it all works out fine.

To put it in perspective, gate fidelities in IBM's five-qubit quantum computer are, at best, 99 percent. So, one operation per 100 goes wrong. And that quantum computer is not fully interconnected. Indeed, if you perform the calculation, the quantum volume is 25, which requires an error rate on the order of one percent, which approximately agrees with the observed capabilities. If IBM's newly announced 17-qubit quantum computer has the same gate fidelity, then it will have a quantum volume of 35, a small increase on the five-qubit system. To get anywhere near the maximum of 289 (17 squared), the IBM crew will have to increase the gate fidelity to about 99.7 percent, which would be a significant technological achievement.
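The figures above can be roughly reproduced with a back-of-the-envelope sketch. It rests on an assumption not spelled out in this article: that the achievable depth is about one over the product of the qubit count and the error rate per operation. It also ignores any overhead from limited connectivity, so treat it as an illustration rather than IBM's actual method. All function names are hypothetical.

```python
def estimated_depth(num_qubits: int, error_rate: float) -> float:
    """Assumed rule of thumb: depth ~ 1 / (qubits * error rate per operation)."""
    if num_qubits <= 0 or error_rate <= 0:
        raise ValueError("num_qubits and error_rate must be positive")
    return 1.0 / (num_qubits * error_rate)


def estimated_quantum_volume(num_qubits: int, error_rate: float) -> float:
    """Square of the smaller of the qubit count and the estimated depth."""
    return min(num_qubits, estimated_depth(num_qubits, error_rate)) ** 2


def error_rate_for_max_volume(num_qubits: int) -> float:
    """Error rate at which the estimated depth equals the qubit count."""
    if num_qubits <= 0:
        raise ValueError("num_qubits must be positive")
    return 1.0 / num_qubits ** 2


for n in (5, 17):
    volume = estimated_quantum_volume(n, 0.01)  # 99 percent gate fidelity
    needed = error_rate_for_max_volume(n)
    print(f"{n} qubits at 1% error: volume ~ {volume:.1f}; "
          f"error rate needed for {n * n}: {needed:.2%}")
```

Under this assumption, five qubits at a one percent error rate are limited by the qubit count, while 17 qubits at the same error rate are limited by depth, which is why the larger machine gains so little.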

And this is where the new benchmark comes in very handy. It gives researchers a very quick way to estimate technology requirements. With some rather simple follow-up calculations, the advantages and disadvantages of different architectural choices can be quickly evaluated. I can imagine quantum volume finding quite widespread use.
